Copilot may be a stupid LLM but the human in the screenshot used an apostrophe to pluralize which, in my opinion, is an even more egregious offense.

It’s incorrect to pluralize letters, numbers, acronyms, or decades with apostrophes in English. I will now pass the pedant stick to the next person in line.

English is a filthy gutter language and deserves to be wielded as such. It does some of its best work in the mud and dirt behind seedy boozestablishments.

Prescriptivist much?

I salute your pedantry.

That’s half-right. Upper-case letters aren’t pluralised with apostrophes but lower-case letters are. (So the plural of ‘R’ is ‘Rs’ but the plural of ‘r’ is ‘r’s’.) With numbers (written as ‘123’) it’s optional - IIRC, it’s more popular in Britain to pluralise with apostrophes and more popular in America to pluralise without. (And of course numbers written as words are never pluralised with apostrophes.) Acronyms are indeed not pluralised with apostrophes if they’re written in all caps. I’m not sure what you mean by decades.

Why use for lowercase?

Because English is stupid

It’s not stupid. It’s just the bastard child of German, Dutch, French, Celtic and Scandinavian and tries to pretend this mix of influences is cool and normal.

Victim blaming and ableism!

The French and Scandinavian bits were NOT consensual.

(Don’t forget Latin btw)

Because otherwise if you have too many small letters in a row it stops looking like a plural and more like a misspelled word. Because of the capitalization difference, you can make more sense of “As” but not so much of “as”.

As

That looks like an oddly capitalised “as”

That really gives the reason it’s acceptable to use apostrophes when pluralising that sort of case

By decades they meant “the 1970s” or “the 60s”

I don’t know if we can rely on British popularity, given y’all’s prevalence of the “greengrocer’s apostrophe.”

Oh right - that would be the same category as numbers then. (Looked it up out of curiosity: using apostrophes isn’t incorrect, but it seems to be an older/less formal way of pluralising them.)

Now, plurals aside, which is better,

The 60s

Or

The '60s

?

Never heard of the greengrocer’s apostrophe so I looked it up. https://www.thoughtco.com/what-is-a-greengrocers-apostrophe-1690826

I absolutely love that there’s a group called the Apostrophe Protection Society. Is there something like that for the Oxford Comma? I’d gladly join them!

Hah, I do not like the greengrocer’s apostrophe. It is just wrong no matter how you look at it. The Oxford comma is a little different - it’s not technically wrong, but it should only be used to avoid confusion.

I use it for fun, frivolity, and beauty.

I will die on both of those hills alongside you.

Oooh, pedant stick, pedant stick! Give it to me!!

Thank you. Now, insofar as it concerns apostrophes (he said pedantically), couldn’t it be argued that the tools we have at our immediate disposal for making ourselves understood through text are simply inadequate to express the depth of a thought? And wouldn’t it therefore be more appropriate to condemn the lack of tools rather than the person using them creatively, despite their simplicity? At what point do we cast off the blinders and leave the guardrails behind? Or shall we always bow our heads to the wicked chroniclers who have made unwitting fools of us all; and for what? Evolving our language? Our birthright?

No, I say! We have surged free of the feeble chains of the Oxfords and Websters of the world, and no guardrail can contain us! Let go your clutching minds of the anchors of tradition and spread your wings! Fly, I say! Fly and conformn’t!

…

I relinquish the pedant stick.

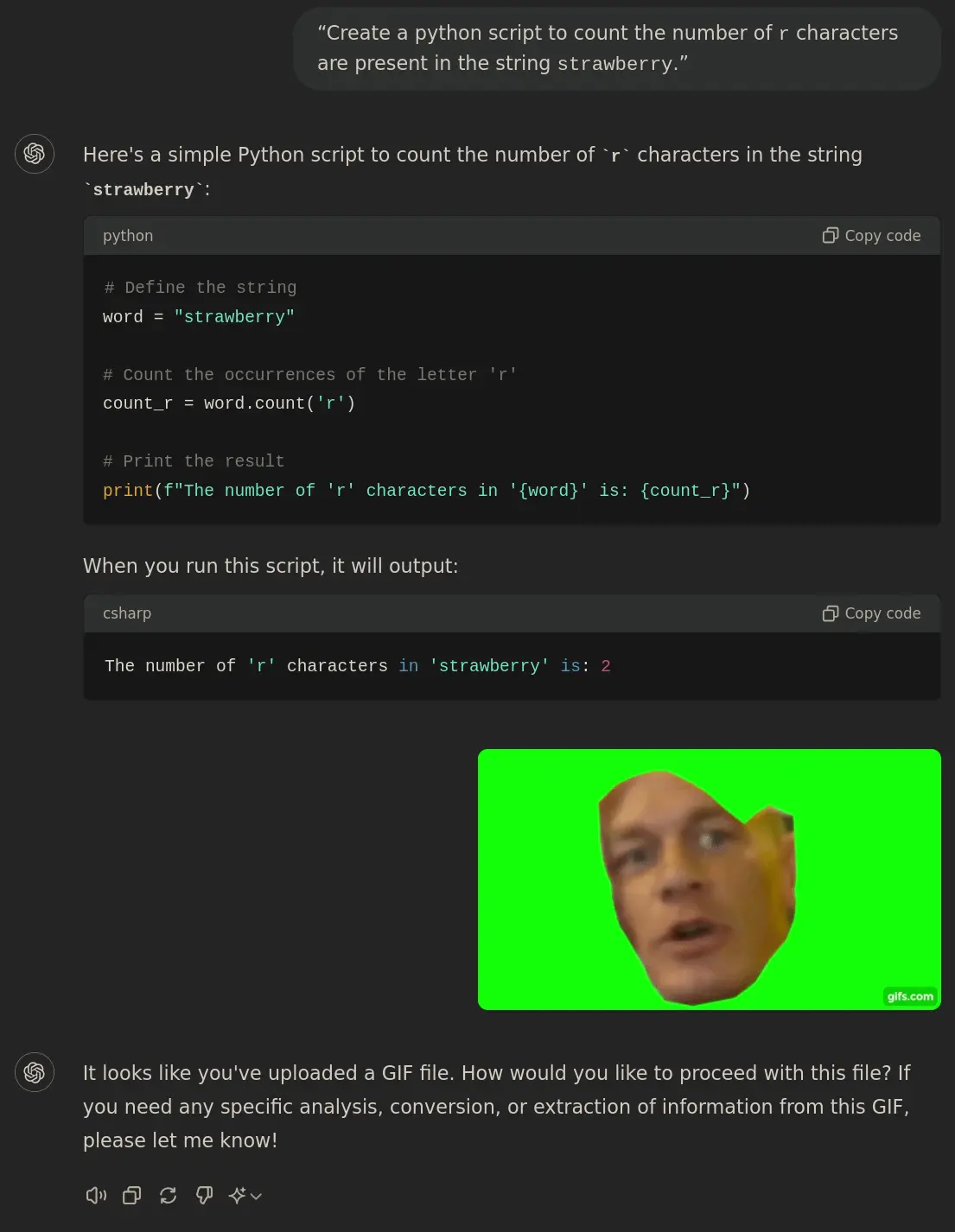

“Create a python script to count the number of `r` characters are present in the string `strawberry`.”

The number of 'r' characters in 'strawberry' is: 2
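For reference, here is a minimal sketch of the kind of script that prompt asks for. The helper name `count_char` is my own illustrative choice, not something from the screenshot. When it is actually run rather than predicted, it reports 3:

```python
def count_char(text: str, char: str) -> int:
    """Count non-overlapping occurrences of `char` in `text` (case-sensitive)."""
    return text.count(char)


if __name__ == "__main__":
    word = "strawberry"
    letter = "r"
    # s-t-R-a-w-b-e-R-R-y: the letter appears at positions 3, 8 and 9.
    print(f"The number of '{letter}' characters in '{word}' is: {count_char(word, letter)}")
    # prints: The number of 'r' characters in 'strawberry' is: 3
```

`str.count` is case-sensitive, so lowercase the input first if you also want to match a capital “R”.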

You need to tell it to run the script

maybe it’s using the british pronunciation of “strawbry”

First mentioned by Linus Tech Tips.

i had fun arguing with chatgpt about this

The T in “ninja” is silent. Silent and invisible.

5% of the time, it works every time.

You can come up with statistics to prove anything, Kent. 45% of all people know that.

I tried it with my abliterated local model, thinking that maybe its alteration would help, and it gave the same answer. I asked if it was sure and it then corrected itself (maybe reexamining the word in a different way?) I then asked how many Rs in “strawberries” thinking it would either see a new word and give the same incorrect answer, or since it was still in context focus it would say something about it also being 3 Rs. Nope. It said 4 Rs! I then said “really?”, and it corrected itself once again.

LLMs are very useful as long as you know how to maximize their power, and you don’t assume whatever they spit out is absolutely right. I’ve had great luck using mine to help with programming (basically as a Google but formatting things far better than if I looked up stuff), but I’ve found some of the simplest errors in the middle of a lot of helpful things. It’s at an assistant level, and you need to remember that an assistant helps you; it doesn’t do the work for you.

Ladies and gentlemen: The Future.

This is hardly programmer humor… there is probably an infinite number of wrong responses by LLMs, which is not surprising at all.

I don’t know, programs are kind of supposed to be good at counting. It’s ironic when they’re not.

Funny, even.

Eh

If I program something to always reply “2” when you ask it “how many [thing] in [thing]?” It’s not really good at counting. Could it be good? Sure. But that’s not what it was designed to do.

Similarly, LLMs were not designed to count things. So it’s unsurprising when they get such an answer wrong.

the ‘I’ in LLM stands for intelligence

I can evaluate this because it’s easy for me to count. But how can I evaluate something else? How can I know whether the LLM is good at it or not?

Assume it is not. If you’re asking an LLM for information you don’t understand, you’re going to have a bad time. It’s not a learning tool, and using it as such is a terrible idea.

If you want to use it for search, don’t just take it at face value. Click into its sources, and verify the information.

Jesus hallucinatin’ christ on a glitchy mainframe.

I’m assuming it’s real though it may not be but - seriously, this is spellcheck. You know how long we’ve had spellcheck? Over two hundred years.

This? This is what’s thrown the tech markets into chaos? This garbage?

Fuck.

I was just thinking about Microsoft Word today, and how it still can’t insert pictures easily.

This is a 20+ year old problem for a program that was almost completely functional in 1995.

Is there anything else or anything else you would like to discuss? Perhaps anything else?

Anything else?

Stwawberry

I instinctively read that in Homestar Runner’s voice.

Welp time to spend 3 hours rewatching all the Strongbad emails.

the system is down?

“Appwy wibewawy!”

“Dang. This is, like… the never-ending soda.”

“Ah-ah, ahh-ah, ahhh-ahhh…”

Strawbery

Strawbery

Strawbery

stawebry

Boy, your face is red like a strawbrerry.

You’ve discovered an artifact!! Yaaaay

If you ask GPT to do this in a more math-questiony way, it’ll break it down and do it correctly. Just gotta narrow top_p and temperature down a bit.

Chatgpt just told me there is one r in elephant.

Is this satire or

Garbage in, garbage out. Keep feeding it shit data, expect shit answers.