From time to time I see this pattern in memes, but what is the original meme / situation?

Off topic, but how does one get a profile pic on Lemmy? Also love you ken.

you can configure it in the web interface. just go to your profile

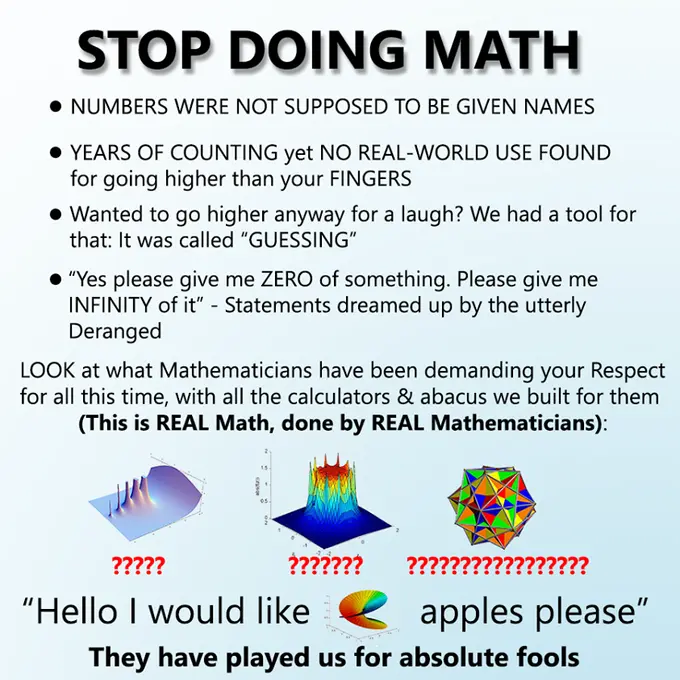

It’s my favourite format. I think the original was ‘stop doing math’

Thank you 😁

There are probably a lot of scientific applications (e.g. statistics, audio, 3D graphics) where exponential notation is the norm and there’s an understanding about precision and significant digits/bits. It’s a space where fixed-point would absolutely destroy performance, because you’d need as many bits as required to store your largest terms. Yes, `NaN` and negative zero are utter disasters in the corners of the IEEE spec, but so is trying to do math with 256bit integers.

For a practical explanation about how stark a difference this is, the PlayStation (one) uses an integer z-buffer (“fixed point”). This is responsible for the vertex popping/warping that the platform is known for. Floating-point z-buffers became the norm almost immediately after the console’s launch, and we’ve used them ever since.

That doesn’t really answer the question, which is about the origins of the meme template.

No real use you say? How would they engineer boats without floats?

Just build submarines, smh my head.

Just invert a sink.

Stop using floats

Call me when you found a way to encode transcendental numbers.

May I propose a dedicated circuit (analog because you can only ever approximate their value) that stores and returns transcendental/irrational numbers exclusively? We can just assume they’re going to be whatever value we need whenever we need them.

Wouldn’t noise in the circuit mean it’d only be reliable to a certain level of precision, anyway?

I mean, every irrational number used in computation is reliable to a certain level of precision. Just because the current (heh) methods aren’t precise enough doesn’t mean they’ll never be.

Do we even have a good way of encoding them in real life without computers?

Just think about them real hard

Here you go

ⲡ

Perhaps you can encode them as computation (i.e. a function of arbitrary precision)

Floats are only great if you deal with numbers that have no need for precision and accuracy. Want to calculate the F cost of an A* node? Floats are good enough.

But every time I need to get any kind of accuracy, I go straight for actual decimal numbers. Unless you are in extreme scenarios, you can afford the extra 64 to 256 bits in your memory

Float is bloat!

The problem is that most languages have no native support for anything other than 32- or 64-bit floats, and some representations on the wire don’t either. And most underlying processors don’t have arbitrary precision support either.

So either you choose speed and sacrifice precision, or you choose precision and sacrifice speed. The architecture might not support arbitrary precision but most languages have a bignum/bigdecimal library that will do it more slowly. It might be necessary to marshal or store those values in databases or over the wire in whatever hacky way necessary (e.g. encapsulating values in a string).

uses 64 bit double instead

I actually hate floats. Integers all the way (unless I have no other choice)

Based and precision pilled.

Integers have fallen billions must use long float

While we’re at it, what the hell is -0 and how does it differ from 0?

For integers it really doesn’t exist. An algorithm for multiplying an integer by -1 is: Invert all bits and add 1 to the right-most bit. You can do that for 0 of course, it won’t hurt.
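For what it’s worth, the invert-and-add-one rule is easy to check in code. A minimal Rust sketch, where `negate_twos_complement` is a made-up name for illustration and wrapping arithmetic is assumed so the edge cases don’t panic:

```rust
/// Negate a two's complement integer as described above:
/// invert all bits, then add 1 (wrapping on overflow).
/// The function name is hypothetical, chosen for this example only.
fn negate_twos_complement(x: i32) -> i32 {
    (!x).wrapping_add(1)
}
```

Calling it with `0` gives `0` back, which is why integers have no separate -0. The one odd case is `i32::MIN`, which negates to itself because its positive counterpart doesn’t fit in 32 bits.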

It’s the negative version

So it’s just like 0 but with an evil goatee?

Look at the graph of y=tan(x)+ⲡ/2

-0 and +0 are completely different.
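To make that a bit more concrete, here’s a small Rust sketch of where +0 and -0 agree and where they don’t. The function names are made up for illustration; the behaviour is what IEEE 754 floats do:

```rust
/// The two zeros compare as equal under `==`.
fn zeros_compare_equal() -> bool {
    0.0_f64 == -0.0_f64
}

/// But their bit patterns differ: only the sign bit is set for -0.0.
fn zeros_share_bits() -> bool {
    0.0_f64.to_bits() == (-0.0_f64).to_bits()
}

/// Dividing by a zero exposes its sign: the result is +inf or -inf.
fn reciprocal(x: f64) -> f64 {
    1.0 / x
}
```

So `reciprocal(0.0)` is positive infinity and `reciprocal(-0.0)` is negative infinity, even though the two inputs compare as equal.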

Obviously floating point is of huge benefit for many audio dsp calculations, from my observations (non-programmer, just long time DAW user, from back in the day when fixed point with relatively low accumulators was often what we had to work with, versus now when 64bit floating point for processing happens more as the rule) - e.g. fixed point equalizers can potentially lead to dc offset in the results. I don’t think peeps would be getting as close to modeling non-linear behavior of analog processors with just fixed point math either.

Audio, like a lot of physical systems, involves logarithmic scales, which is where floating-point shines. Problem is, all the other physical systems, which are not logarithmic, only get to eat the scraps left over by IEEE 754. Floating point is a scam!

I have been thinking that maybe modern programming languages should move away from supporting IEEE 754 all within one data type.

Like, we’ve figured out that having a `null` value for everything always is a terrible idea. Instead, we’ve started encoding potential absence into our type system with `Option` or `Result` types, which also encourages dealing with such absence at the edges of our program, where it should be done.

Well, `NaN` is `null` all over again. Instead, we could make the division operator an associated function which returns a `Result<f64>` and disallow `f64` from ever being `NaN`.

My main concern is interop with the outside world. So, I guess, there would still need to be an IEEE 754 compliant data type. But we could call it `ieee_754_f64` to really get on the nerves of anyone wanting to use it when it’s not strictly necessary.

Well, and my secondary concern, which is that AI models would still want to just calculate with tons of floats, without error-handling at every intermediate step, even if it sometimes means that the end result is a shitty vector of `NaN`s, that would be supported with that, too.

NaN isn’t like null at all. It doesn’t mean there isn’t anything. It means the result of the operation is not a number that can be represented.

The only option is that operations that would result in nan are errors. Which doesn’t seem like a great solution.

Well, that is what I meant. That `NaN` is effectively an error state. It’s only like `null` in that any float can be in this error state, because you can’t rule out this error state via the type system.

Why do you feel like it’s not a great solution to make `NaN` an explicit error?

There’s plenty of cases where I would like to do some large calculation that can potentially give a NaN at many intermediate steps. I prefer to check for the NaN at the end of the calculation, rather than have a bunch of checks in every intermediate step.

How I handle the failed calculation is rarely dependent on which intermediate step gave a NaN.
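A rough Rust sketch of that “check once at the end” style, relying on the fact that NaN propagates through later arithmetic. The `pipeline` function and its three steps are invented for illustration, not taken from any real calculation:

```rust
/// Hypothetical multi-step calculation with no intermediate checks.
/// Any step may produce NaN; once it appears it propagates to the result.
fn pipeline(x: f64) -> Option<f64> {
    let a = x.sqrt();      // NaN if x is negative
    let b = a.ln();        // NaN if a is NaN
    let c = b * 2.0 + 1.0; // NaN stays NaN through arithmetic
    // Single check at the very end of the chain.
    if c.is_nan() {
        None
    } else {
        Some(c)
    }
}
```

Here `pipeline(-1.0)` comes back as `None` without the code caring which step went wrong, while `pipeline(1.0)` returns a normal value.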

This feels like people want to take away a tool that makes development in the engineering world a whole lot easier because “null bad”, or because they can’t see the use of multiplying 1e27 with 1e-30.

Well, I’m not saying that I want to take tools away. I’m explicitly saying that a `ieee_754_f64` type could exist. I just want it to be named annoyingly, so anyone who doesn’t know why they should use it, will avoid it.

If you chain a whole bunch of calculations where you don’t care for `NaN`, that’s also perfectly unproblematic. I just think, it would be helpful to:

- Nudge people towards doing a `NaN` check after such a chain of calculations, because it can be a real pain, if you don’t do it.
- Document in the type system that this check has already taken place. If you know that a float can’t be `NaN`, then you have guarantees that, for example, addition will never produce a `NaN`. It allows you to remove some of the defensive checks, you might have felt the need to perform on parameters.

Special cases are allowed to exist and shouldn’t be made noticeably more annoying. I just want it to not be the default, because it’s more dangerous and in the average applications, lots of floats are just passed through, so it would make sense to block `NaN`s right away.
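The “document the check in the type system” idea could look roughly like this in Rust. This is only a sketch: `NotNan` and `checked_chain` are hypothetical names, and the arithmetic inside the chain is arbitrary:

```rust
/// Hypothetical wrapper: holding a `NotNan` means the NaN check already happened.
#[derive(Debug, Clone, Copy, PartialEq, PartialOrd)]
struct NotNan(f64);

impl NotNan {
    /// The only way to build a `NotNan`; rejects NaN at construction.
    fn new(value: f64) -> Option<NotNan> {
        if value.is_nan() {
            None
        } else {
            Some(NotNan(value))
        }
    }

    /// Get the plain float back out.
    fn get(self) -> f64 {
        self.0
    }
}

/// Run an unchecked chain of plain f64 math, then validate once at the end.
fn checked_chain(x: f64) -> Option<NotNan> {
    let raw = (x - 1.0).sqrt() / x;
    NotNan::new(raw)
}
```

One caveat for the addition guarantee: ruling out NaN inputs alone isn’t quite enough, since `f64::INFINITY + f64::NEG_INFINITY` is NaN. A type like this would have to re-check after arithmetic or exclude infinities as well.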

It doesn’t have to “error” if the result case is offered and handled.

Float processing is at the hardware level. It needs a way to signal when an unrepresented value would be returned.

My thinking is that a call to the safe division method would check after the division, whether the result is a `NaN`. And if it is, then it returns an Error-value, which you can handle.

Obviously, you could do the same with a `NaN` by just throwing an if-else after any division statement, but I would like to enforce it in the type system that this check is done.

I feel like that’s adding overhead to every operation to catch the few operations that could result in a nan.

But I guess you could provide alternative safe versions of float operations to account for this. Which may be what you meant thinking about it lol
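A minimal sketch of what such an opt-in safe division might look like in Rust. The names `safe_div`, `div_result`, and `DivError` are invented for this example; nothing like them is in the standard library:

```rust
/// Hypothetical error type for the sketch.
#[derive(Debug, PartialEq)]
enum DivError {
    NotANumber,
}

/// Divide normally, then check the result once and turn NaN into an error.
fn safe_div(left: f64, right: f64) -> Result<f64, DivError> {
    let quotient = left / right;
    if quotient.is_nan() {
        Err(DivError::NotANumber)
    } else {
        Ok(quotient)
    }
}

/// Keep dividing an earlier result without unwrapping it first;
/// an earlier error is passed through untouched.
fn div_result(left: Result<f64, DivError>, right: f64) -> Result<f64, DivError> {
    left.and_then(|l| safe_div(l, right))
}
```

With this, `safe_div(0.0, 0.0)` is an `Err`, while `safe_div(1.0, 0.0)` is still `Ok` with an infinite value, since only NaN is treated as the error here. Plain `/` stays available for code that doesn’t want the extra check.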

While I get your proposal, I’d think this would make dealing with float hell. Do you really want to `.unwrap()` every time you deal with it? Surely not.

One thing that would be great, is that the `/` operator could work between `Result` and `f64`, as well as between `Result` and `Result`. Would be like doing a `.map(|left| left / right)` operation.

I agree with moving away from `float`s but I have a far simpler proposal… just use a struct of two integers - a value and an offset. If you want to make it an IEEE standard where the offset is a four bit signed value and the value is just a 28 or 60 bit regular old integer then sure - but I can count the number of times I used floats on one hand and I can count the number of times I wouldn’t have been better off just using two integers on -0 hands.

Floats specifically solve the issue of how to store an absurdly large range of values in an extremely modest amount of space - that’s not a problem we need to generalize a solution for. In most cases having values up to the million magnitude with three decimals of precision is good enough. Generally speaking when you do float arithmetic your numbers will be within an order of magnitude or two… most people aren’t adding the length of the universe in seconds to the width of an atom in meters… and if they are floats don’t work anyways.

I think the concept of having a fractionally defined value with a magnitude offset was just deeply flawed from the get-go - we need some way to deal with decimal values on computers but expressing those values as fractions is needlessly imprecise.
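One way to read the two-integer proposal, sketched in Rust. Everything here is an assumption for illustration: the `Decimal` name, treating the “offset” as a count of decimal places, and the field widths (an `i64` and a `u32` rather than the 60-bit and 4-bit fields mentioned above). Overflow is not handled:

```rust
/// Hypothetical two-integer decimal: represents `value / 10^scale`.
#[derive(Debug, Clone, Copy, PartialEq)]
struct Decimal {
    value: i64, // the digits, as a plain integer
    scale: u32, // how many of those digits sit after the decimal point
}

impl Decimal {
    /// Add two decimals by first bringing both to the larger scale.
    /// No overflow handling; this is a sketch, not a production type.
    fn add(self, other: Decimal) -> Decimal {
        let scale = self.scale.max(other.scale);
        let a = self.value * 10_i64.pow(scale - self.scale);
        let b = other.value * 10_i64.pow(scale - other.scale);
        Decimal { value: a + b, scale }
    }
}
```

With this, 0.1 + 0.2 (that is, `value: 1, scale: 1` plus `value: 2, scale: 1`) comes out as exactly 0.3, whereas `0.1_f64 + 0.2_f64 == 0.3_f64` is false with binary floats.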

The meme is right for once