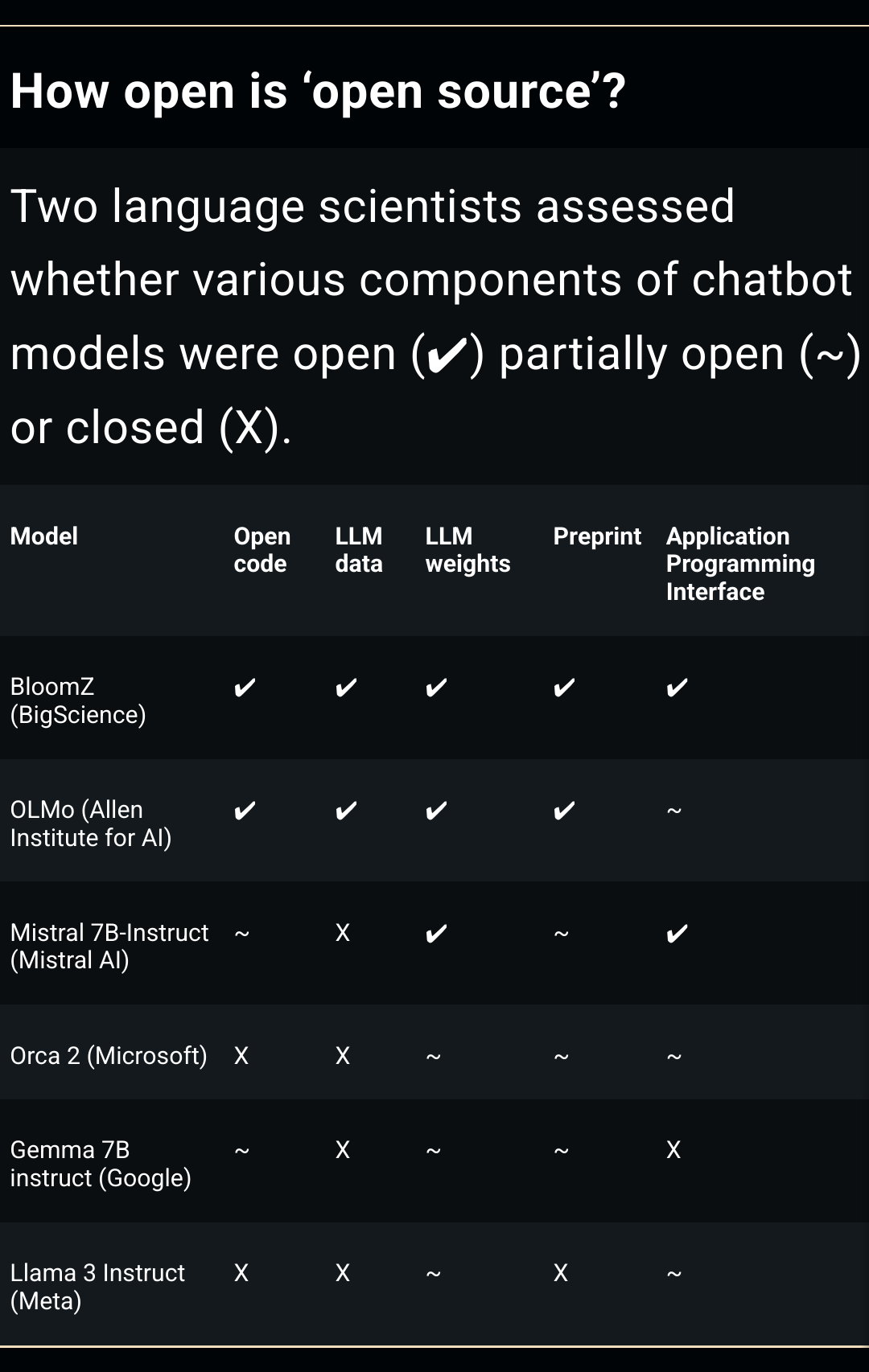

Context: Gemma is a family of free-to-use AI models with a focus on being small. According to benchmarks, it outperforms Llama 3.

Just to be clear, Gemma is only partially open sourced, in select areas of the code.

how are the weights partially open?

Only portions of the code are published while the rest is kept under wraps. Classic corporate America BS: finding a loophole to use a trendy term.

neural network weights are just files, collections of numbers forming matrices; how is a partially open collection of weights of any use?
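To make the "just files of numbers" point concrete, here is a minimal sketch. It is not Gemma's or Ollama's actual file format; the `toy_weights.bin` name, the header layout, and the `save_matrix`/`load_matrix` helpers are all made up for illustration. It writes a small matrix to disk as raw floats and reads it back.

```python
import os
import struct
import tempfile


def save_matrix(path, matrix):
    """Write a 2-D list of floats: rows and cols as uint32, then row-major float32 values."""
    rows, cols = len(matrix), len(matrix[0])
    with open(path, "wb") as f:
        f.write(struct.pack("<II", rows, cols))       # tiny hypothetical header
        for row in matrix:
            f.write(struct.pack(f"<{cols}f", *row))   # the "weights" themselves


def load_matrix(path):
    """Read back a matrix written by save_matrix."""
    with open(path, "rb") as f:
        rows, cols = struct.unpack("<II", f.read(8))
        return [list(struct.unpack(f"<{cols}f", f.read(4 * cols))) for _ in range(rows)]


# Toy values chosen so they are exactly representable as float32.
weights = [[0.5, -1.25, 2.0], [0.0, 3.5, -0.75]]
path = os.path.join(tempfile.mkdtemp(), "toy_weights.bin")
save_matrix(path, weights)
restored = load_matrix(path)
print(restored)
```

If part of such a file were withheld, the matrices could not be rebuilt, which is why "partially open weights" would not be much use.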

the weights are open

    $ docker exec -it ollama ollama show gemma:7b
      Model
        arch                gemma
        parameters          9B
        quantization        Q4_0
        context length      8192
        embedding length    3072

      Parameters
        stop                "<start_of_turn>"
        stop                "<end_of_turn>"
        penalize_newline    false
        repeat_penalty      1

      License
        Gemma Terms of Use
        Last modified: February 21, 2024

Since there is a user acceptance policy that restricts what you can do with the model that might be considered “partially” open.

Yeah, you can see the weights, but it seems you are limited in what you can do with them. How we’ve gotten to the point where you can protect these random numbers that I’ve shared with you through a UA is beyond me.

the same happens with BloomZ, and that is listed as open

Wasn’t aware of that, I was just taking a guess.

That being said, I wouldn’t consider either open given those restrictions.

It’s already on Ollama. Exciting times!

I’m looking at the Hugging Face leaderboards and Gemma isn’t even top 10. How does it stack up against Llama 3 or Mistral-Open-Orca?

It’s currently number 11 in the LMSys chatbot leaderboard. It’s sitting above Llama 3 and Claude 3 Sonnet.